[AINews] Snowflake Arctic: Fully Open 10B+128x4B Dense-MoE Hybrid LLM • Buttondown

Chapters

AI Reddit, Twitter, and Discord Recap

Unique AI Projects and Showcases

Discord Community Highlights

Discord Community Highlights

Hardware Discussion and Troubleshooting on LM Studio Discord

LLaMA Pro Post-Pretraining Methodology

Nsight Compute Analysis and CUDA Tweaks

Eleuther Discord Channels Updates

HuggingFace Community Highlights

Discussions on AI Understanding and Capabilities

Market Reactions and Meta's Q1 Earnings Report

LangChain AI

OpenInterpreter Discussions

Queries and Challenges in AI Discussions

AI Reddit, Twitter, and Discord Recap

The AI Reddit Recap highlighted advances such as NVIDIA's Align Your Steps technique and comparisons between Stable Diffusion models. The AI Twitter Recap covered partnerships between OpenAI and NVIDIA, updates to the Llama 3 and Phi-3 models, and the release of the Snowflake Arctic model. The AI Discord Recap summarized major releases such as Meta's Llama 3 and Microsoft's Phi-3, advances in RAG frameworks, discussions of multimodal models, open-source tooling such as Cohere's Toolkit, model deployment with the Python API of Datasette's LLM tool, and specialized models such as Internist.ai 7b.

Unique AI Projects and Showcases

This section highlights AI projects that generated excitement in the community, such as Brewed Rebellion, an AI-powered text RPG, and the 01 project for embedding AI into devices. Community members showed strong interest in both.

Discord Community Highlights

Discussions across Discord channels were lively and diverse. In the LAION Discord, conversations revolved around AI models and potential privacy concerns with cloud services. The OpenAccess AI Collective (axolotl) Discord focused on advances such as Snowflake Arctic, a 480B-parameter dense + MoE hybrid model, and the promising performance of the Internist.ai 7b model. In the LangChain AI Discord, users explored chatbot enhancements, shared AI projects, and exchanged tutorials on training AI models. The Interconnects Discord discussed AI interpretation models and the ethical implications of AI capabilities. The Mozilla AI Discord community ran into technical issues with llama models, while the DiscoResearch Discord explored batch processing and language nuances in AI models. Finally, the Datasette - LLM Discord discussed optimizing text embeddings and using tools like Claude for automation.
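The dense + MoE hybrid design of Snowflake's model can be illustrated with a toy layer: a dense MLP that every token passes through, plus a residual mixture-of-experts branch in which a router activates only the top-k experts. This is a minimal pure-Python sketch, not Snowflake's implementation; the top-k gating with softmax weights renormalized over the chosen experts, the plain-list "vectors", and the toy experts are all assumptions chosen for readability.

```python
import math
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def hybrid_layer(x: Vector, dense_mlp: Layer, experts: List[Layer],
                 router: Layer, top_k: int = 2) -> Vector:
    """Toy dense + residual-MoE block: x + dense_mlp(x) + gated sum of the top-k experts."""
    dense_out = dense_mlp(x)  # every token goes through the dense path
    logits = router(x)        # one score per expert
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # renormalize over the chosen experts
    moe_out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        moe_out = [acc + gate * y for acc, y in zip(moe_out, experts[i](x))]
    return [xi + d + m for xi, d, m in zip(x, dense_out, moe_out)]


# Usage: four toy "experts" that scale the input by 0, 1, 2 and 3.
experts = [lambda v, s=s: [s * e for e in v] for s in range(4)]


def zero_dense(v: Vector) -> Vector:
    return [0.0] * len(v)


def fixed_router(v: Vector) -> Vector:
    return [0.0, 0.0, 5.0, 1.0]


print(hybrid_layer([1.0, 2.0], zero_dense, experts, fixed_router, top_k=1))  # [3.0, 6.0]
```

The point of the hybrid is that total parameters grow with the number of experts, while per-token compute grows only with the dense path plus the few experts the router selects.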

Discord Community Highlights

This section highlights interactions from several AI-focused Discord communities, ranging from greetings, meetups, mysterious tweets, and community humor to AI model implementations, inappropriate content, and progress in fine-tuning models. Topics spanned CLI features, open-source model releases, suggestions for optimizing model training, and hardware requirements for training. Together, these exchanges show how actively the community engages and how its interests are evolving.

Hardware Discussion and Troubleshooting on LM Studio Discord

This section covers hardware, GPU acceleration, and troubleshooting topics from the LM Studio Discord channels. Users reported GPU offload issues, discussed why GPU acceleration is worth pursuing despite setup difficulties, and shared fixes for loading errors with Phi-3 128k models. Other threads covered regressions in newer software versions that hurt usability, cases where ample VRAM did not prevent model-loading failures, and how to choose a CPU and GPU for AI workloads. Members also emphasized power efficiency, RAM upgrades for better performance, and techniques for diagnosing GPU-related errors.

LLaMA Pro Post-Pretraining Methodology

Members showed interest in LLaMA Pro's post-pretraining method, which aims to add knowledge to a model without catastrophic forgetting. Discussion of techniques such as QDoRA+FSDP and comparisons with a 141B Mistral model prompted a closer look at the transformer architecture and scaling considerations.
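As we understand the LLaMA Pro paper, its post-pretraining method is "block expansion": copies of existing Transformer blocks are interleaved into the model with their output projections zero-initialized, so each copy starts as an identity mapping; the original blocks are frozen and only the new blocks are trained on the new corpus. The sketch below shows that idea on toy residual MLP blocks in pure Python. The `every` parameter, the ReLU MLP, and all names are hypothetical simplifications; real implementations zero the output projections inside full attention and MLP sublayers.

```python
import copy
import dataclasses
from dataclasses import dataclass
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


@dataclass
class ResidualBlock:
    """Toy residual MLP block: x + w_out @ relu(w_in @ x)."""
    w_in: Matrix
    w_out: Matrix
    trainable: bool = True

    def __call__(self, x: Vector) -> Vector:
        hidden = [max(0.0, h) for h in matvec(self.w_in, x)]
        return [xi + yi for xi, yi in zip(x, matvec(self.w_out, hidden))]


def expand_blocks(blocks: List[ResidualBlock], every: int) -> List[ResidualBlock]:
    """Freeze the original blocks and insert a trainable copy after every `every` of them.

    Each copy reuses its source block's input weights but gets an all-zero output
    projection, so it starts as the identity and the expanded model initially
    computes exactly what the original model did.
    """
    expanded: List[ResidualBlock] = []
    for i, block in enumerate(blocks, start=1):
        expanded.append(dataclasses.replace(block, trainable=False))  # frozen original
        if i % every == 0:
            expanded.append(ResidualBlock(
                w_in=copy.deepcopy(block.w_in),
                w_out=[[0.0] * len(row) for row in block.w_out],  # zero output projection
                trainable=True,
            ))
    return expanded


def run(blocks: List[ResidualBlock], x: Vector) -> Vector:
    for block in blocks:
        x = block(x)
    return x


original = [ResidualBlock([[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.0], [0.0, 0.5]]) for _ in range(4)]
expanded = expand_blocks(original, every=2)
print(len(expanded), run(original, [1.0, -2.0]) == run(expanded, [1.0, -2.0]))  # 6 True
```

During continued pretraining, only blocks with `trainable=True` would receive gradient updates, which is how the approach tries to avoid overwriting what the frozen blocks already know.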

Nsight Compute Analysis and CUDA Tweaks

In this section, users discuss using NVIDIA Nsight Compute for in-depth analysis of CUDA applications to uncover inefficient GPU usage. Nsight Compute profiling helps pinpoint kernel performance issues, and selecting the 'full' metric set yields the most comprehensive report. Members also noted that since Volta, independent thread scheduling means threads in a warp are no longer required to execute the same instruction in lockstep. Other topics included tensor modifications that crashed Triton kernels, incompatibilities between flash-attn and CUDA libraries, and how PyTorch handles CUDA operations and memory management. The discussion also pointed to additional resources for further reading.
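Selecting the 'full' metric set is a command-line option of the Nsight Compute CLI, `ncu`. The sketch below assembles such an invocation from Python; it assumes `ncu` is installed and on `PATH`, and the application (`train.py`), report name, and kernel name are hypothetical placeholders. It uses only the `--set full`, `-o` (report output), and `--kernel-name` options.

```python
import shlex
from typing import List, Optional


def ncu_command(app: List[str], report: str, kernel: Optional[str] = None) -> List[str]:
    """Build an Nsight Compute CLI call that collects the 'full' metric set."""
    cmd = ["ncu", "--set", "full", "-o", report]
    if kernel is not None:
        # Profile only the kernel with this name instead of every launch.
        cmd += ["--kernel-name", kernel]
    return cmd + app


cmd = ncu_command(["python", "train.py"], report="train_profile", kernel="my_kernel")
print(shlex.join(cmd))
# → ncu --set full -o train_profile --kernel-name my_kernel python train.py
# To actually profile (requires ncu on PATH and a CUDA GPU):
# import subprocess; subprocess.run(cmd, check=True)
```

Because the full set collects many metrics and replays each kernel several times, restricting profiling to one kernel keeps the run time manageable.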

Eleuther Discord Channels Updates

This section covers updates and discussions from several channels on the Eleuther Discord server, including regression analysis, the sampling methodology behind Chinchilla, LayerNorm and information deletion, Penzai's functionality, the Mistral model's performance, tokenization issues, and model comparisons. It also touches on issues with Mixtral 8x7b, image generation with Stability AI's models, and model performance in China. Finally, it mentions announcements from the OpenRouter and HuggingFace communities, such as the release of Llama 3 and Phi-3, the introduction of datasets like FineWeb, and conversational AI becoming available on iOS devices.
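The Chinchilla discussion concerns fitting a parametric scaling law by regression. For reference, the Chinchilla paper fits the loss as L(N, D) = E + A / N^α + B / D^β, where N is the parameter count and D the number of training tokens. The sketch below evaluates that form with the constants reported in the paper; replication attempts have questioned this fit, so treat the defaults as illustrative rather than settled, and note that the thread's specific sampling details are not reproduced here.

```python
def chinchilla_loss(n_params: float, n_tokens: float,
                    E: float = 1.69, A: float = 406.4, B: float = 410.7,
                    alpha: float = 0.34, beta: float = 0.28) -> float:
    """Parametric scaling law L(N, D) = E + A / N**alpha + B / D**beta.

    Defaults are the fitted constants reported in the Chinchilla paper; a
    regression on other data (or a replication) can produce different values.
    """
    return E + A / n_params ** alpha + B / n_tokens ** beta


# Chinchilla itself: 70B parameters trained on 1.4T tokens.
print(chinchilla_loss(70e9, 1.4e12))
```

E is the irreducible loss; the other two terms shrink as the model and the dataset grow, which is why the fitted exponents determine how to split a compute budget between them.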

HuggingFace Community Highlights

HuggingFace community discussions covered a wide range of topics, including batching, fine-tuning, Snowflake's new models, setting up Python virtual environments, integrating custom streaming pipelines, and various product launches. Notable threads covered the limitations of zero-shot classification, concerns about fine-tuning Mistral 7b, advances in RAG frameworks, a new architecture for text-based games, and Apple's release of the OpenELM-270M models. Members also discussed the announcement of the Trustworthy Language Model (TLM) v1.0, looked for open-source speech-to-text (STT) frontends, and explored processing prompts in parallel with large language models (LLMs).
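Batching prompts of different lengths requires padding them to a common length and passing an attention mask so the model ignores the padding. For generation with decoder-only models, padding is usually placed on the left so that every row ends with its last real token, where new tokens are appended; Hugging Face tokenizers expose this choice as `padding_side`. The pure-Python sketch below shows the idea; the token ids and pad id are hypothetical.

```python
from typing import List, Tuple


def pad_batch(batch: List[List[int]], pad_id: int,
              side: str = "left") -> Tuple[List[List[int]], List[List[int]]]:
    """Pad token-id sequences to a common length and build matching attention masks."""
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    width = max(len(seq) for seq in batch)
    input_ids, attention_mask = [], []
    for seq in batch:
        n_pad = width - len(seq)
        if side == "left":
            input_ids.append([pad_id] * n_pad + seq)
            attention_mask.append([0] * n_pad + [1] * len(seq))
        else:
            input_ids.append(seq + [pad_id] * n_pad)
            attention_mask.append([1] * len(seq) + [0] * n_pad)
    return input_ids, attention_mask


ids, mask = pad_batch([[5, 6, 7], [8]], pad_id=0)
print(ids)   # [[5, 6, 7], [0, 0, 8]]
print(mask)  # [[1, 1, 1], [0, 0, 1]]
```

Right padding remains common for training and classification, where the model reads the whole sequence at once rather than extending it.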

Discussions on AI Understanding and Capabilities

Discussions on AI's Understanding Capabilities

A deep conversation unfolded about whether a model can 'truly understand.' One argument was that logic, as a unique confluence of syntax and semantics, could let a model perform operations over meaning and thereby reach genuine comprehension. Members also pointed out that autoregressive models such as Transformers have been shown to be Turing complete under idealized assumptions, meaning they have enough computational power, in principle, to execute any program.

AI in Language and Society

On the relationship between language and AI, members argued that language will likely evolve alongside AI, producing new concepts that allow clearer communication. They also discussed how lossy language is, and how that limits expressing and translating complete ideas.

On the Horizon: Open Source AI Models

Excitement bubbled about Apple's OpenELM—an efficient, open-source language model family—and its implications for the broader trend of open-source development. Speculations were sparked on whether this signified a shift in Apple’s proprietary stance towards AI and whether other companies might follow.

AI-Assisted Communication Enters Discussions

The integration of AI with communication technology drew interest, including voice-to-text software and custom wake words for home voice assistants. Members stressed that AI conversations need effective flow control, such as mechanisms that let a user interrupt a virtual assistant and let the conversation recover afterward.
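The interruption and recovery mechanisms mentioned above can be thought of as a small turn-taking state machine. The sketch below is entirely hypothetical (it is not any assistant's API): it treats the assistant as speaking while reply sentences remain queued, stops playback whenever the user speaks, keeps the unspoken remainder, and re-queues it on request. Wake-word detection is reduced to a prefix check on a transcript, with an assumed wake word.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TurnManager:
    """Hypothetical turn-taking logic for a voice assistant (not any product's API)."""
    wake_word: str = "hey assistant"                # assumed custom wake word
    queue: List[str] = field(default_factory=list)  # reply sentences not yet spoken
    saved: List[str] = field(default_factory=list)  # unspoken text kept after an interruption

    @property
    def speaking(self) -> bool:
        return bool(self.queue)

    def reply(self, sentences: List[str]) -> None:
        self.queue = list(sentences)

    def speak_next(self) -> Optional[str]:
        return self.queue.pop(0) if self.queue else None

    def hear(self, transcript: str) -> str:
        """Classify an incoming transcript as 'interrupted', 'command', or 'ignored'."""
        if self.speaking:
            # Interruption: any user speech stops playback; keep the rest for recovery.
            self.saved, self.queue = self.queue, []
            return "interrupted"
        if transcript.strip().lower().startswith(self.wake_word):
            return "command"
        return "ignored"

    def resume(self) -> bool:
        """Recovery: re-queue whatever was cut off; return False if nothing was."""
        if not self.saved:
            return False
        self.queue, self.saved = self.saved, []
        return True


tm = TurnManager()
print(tm.hear("Hey assistant, what's the weather?"))  # command
tm.reply(["It is sunny.", "Highs near 20 degrees."])
print(tm.speak_next())                                # It is sunny.
print(tm.hear("wait"))                                # interrupted
```

A real assistant would also need to decide whether an interruption is a new request or a cue to continue; `resume` leaves that decision to the caller.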

Exploration of AI-Enhanced Text RPG

A member shared their creation, Brewed Rebellion—an AI-powered text RPG on Playlab.AI, where players navigate the workplace politics involved in unionizing without getting caught by higher management.

Market Reactions and Meta's Q1 Earnings Report

Members discussed the market's lukewarm reaction to Llama 3, which coincided with Meta's Q1 earnings report. It was noted that Meta's stock price dropped while CEO Mark Zuckerberg was commenting on AI, and one member joked that Meta's increased spending might be going to GPUs for even larger AI models.

LangChain AI

Seeking Template Structure for LLaMA-3:

A member inquired about the existence of headers in the LLaMA-3 prompt template for providing context to questions, referencing the official documentation. Concerns were raised about the completeness of the documentation due to the model's recent release.
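For reference, the prompt format published in Meta's Llama 3 model card marks each turn with a role header (`<|start_header_id|>role<|end_header_id|>`) followed by a blank line, and ends the turn with `<|eot_id|>`. Context for a question is commonly placed in the system turn or prepended to the user turn. The sketch below assembles that format by hand; the message contents are placeholders. In practice, Hugging Face `transformers` can render it from the model's bundled template via `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)`, and the special tokens should be checked against the model card.

```python
from typing import Dict, List


def format_llama3(messages: List[Dict[str, str]]) -> str:
    """Render chat messages in the Llama 3 instruct prompt format."""
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Each turn: a role header, a blank line, the content, then end-of-turn.
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Leave an open assistant header so the model generates the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = format_llama3([
    {"role": "system", "content": "Answer using only the provided context."},
    {"role": "user", "content": "Context: <retrieved text>\n\nQuestion: <question>"},
])
print(prompt)
```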

Expanding RAG with LangChain:

An article details how to integrate adaptive routing, corrective fallback, and self-correction into Retrieval-Augmented Generation (RAG) frameworks using LangChain's LangGraph. The full write-up is available on Medium.
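The three techniques can be read as control flow wrapped around a basic retrieve-then-generate step. The sketch below expresses that flow in plain Python with every component passed in as a hypothetical callable; it does not use LangGraph's API, which would model each step as a node in a `StateGraph` with conditional edges between them. The retry limit and the two-source routing are assumptions for illustration.

```python
from typing import Callable, List

Docs = List[str]


def adaptive_rag(
    question: str,
    route: Callable[[str], str],               # returns "vectorstore" or "web"
    retrieve: Callable[[str], Docs],
    web_search: Callable[[str], Docs],
    is_relevant: Callable[[str, str], bool],   # grades one document against the question
    generate: Callable[[str, Docs], str],
    is_grounded: Callable[[str, Docs], bool],  # checks the answer against the documents
    max_retries: int = 2,
) -> str:
    # Adaptive routing: choose a source before retrieving anything.
    docs = retrieve(question) if route(question) == "vectorstore" else web_search(question)
    # Corrective fallback: drop irrelevant documents; if none survive, search the web instead.
    docs = [d for d in docs if is_relevant(question, d)]
    if not docs:
        docs = web_search(question)
    # Self-correction: regenerate while the answer is not grounded, up to max_retries times.
    answer = generate(question, docs)
    for _ in range(max_retries):
        if is_grounded(answer, docs):
            break
        answer = generate(question, docs)
    return answer  # may still be ungrounded once retries run out
```

In a real pipeline, the grading and grounding checks are typically LLM calls themselves, which is why capping the retries matters for cost and latency.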

In Search of Pull Request Partners:

A member asked where to request a review for a partner pull request and whether the share-your-work channel was the right place for it.

The Brewed Rebellion:

A new text-based RPG, 'Brewed Rebellion,' was shared: players take the role of a barista at StarBeans and navigate workplace politics to form a union. The game is hosted on Playlab.AI.

Introducing Collate:

A platform named Collate was introduced that turns saved articles into a bite-sized daily newsletter. Feedback is welcome, and it can be tried at collate.one.

Clone of Writesonic and Copy.ai Launched:

BlogIQ, a new app powered by OpenAI and LangChain that aims to simplify content creation for bloggers, is now available on GitHub.

OpenInterpreter Discussions


- Members report varying success with different models on OpenInterpreter, with WizardLM-2 8x22B and GPT-4 Turbo as top performers, while models like Llama 3 behave inconsistently.
- Running code locally with different models causes confusion; using an additional flag was mentioned as a fix.
- Members discussed the updates needed to fix local models with OpenInterpreter, including advice on specific flags, and pointed users to further help if issues persist.
- A member asked about alternatives to tkinter for building the user interface of an 'AI device,' with future use on microcontrollers in mind.
- Members shared GitHub repositories and papers on computer vision models, focusing on lightweight models such as moondream and on running models like Llama 3 at different quantization settings to manage VRAM usage.
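The VRAM side of choosing a quantization level is mostly arithmetic: weight memory is roughly the parameter count times the bits per weight, divided by 8 to get bytes. The sketch below computes that estimate; it covers the weights only and ignores the KV cache, activations, and runtime overhead, and practical quantization formats store extra scale data, so real files are somewhat larger than the nominal bit width suggests.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# An 8B-parameter model (Llama 3 8B is roughly this size) at common precisions.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: ~{weight_memory_gb(8e9, bits):.0f} GB")
# 16-bit: ~16 GB
#  8-bit: ~8 GB
#  4-bit: ~4 GB
```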

Queries and Challenges in AI Discussions

This section covers user questions and challenges from several AI communities: batch generation, prompt nuances in German, difficulties with constraints in text summarization, shared quantizations of models, impressive Phi-3 benchmarks, technical difficulties with DiscoLM-70b, resolved questions about the Python API of Datasette's LLM tool, using the LLM CLI with Claude to summarize Hacker News threads, explorations of embedding APIs, and the divergent JSON mode implementations that Octo AI and Anyscale offer for open-source models. It also addresses context utilization in AI tools and ends with an inappropriate link shared in one community.
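The summary does not detail how Octo AI's and Anyscale's JSON modes differ, so the sketch below is only a generic defensive pattern: validate the model's reply locally instead of relying on any one provider's guarantee. The helper is hypothetical, and its rule of stripping an optional Markdown code-fence wrapper is an assumption about how some models format JSON replies.

```python
import json
from typing import Any

TICKS = "`" * 3  # a Markdown code fence, built this way to keep the example readable


def parse_json_reply(text: str) -> Any:
    """Parse a model reply as JSON, tolerating an optional Markdown code-fence wrapper."""
    body = text.strip()
    if len(body) >= 6 and body.startswith(TICKS) and body.endswith(TICKS):
        body = body[3:-3].strip()
        if body.startswith("json"):  # drop a language tag such as "json"
            body = body[len("json"):]
    return json.loads(body)  # raises json.JSONDecodeError if still not valid JSON


print(parse_json_reply('{"label": "positive"}'))
print(parse_json_reply(TICKS + 'json\n{"label": "positive"}\n' + TICKS))
```

Raising on invalid JSON, rather than guessing, lets the caller decide whether to retry the request or fall back.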

FAQ

Q: What is Nvidia's Align Your Steps technique?

A: NVIDIA's Align Your Steps is a technique for optimizing the sampling schedule (the sequence of noise levels, or timesteps) that diffusion models use when generating images, which improves output quality when sampling with only a few steps.

Q: What is the Stable Diffusion model in AI?

A: Stable Diffusion is a family of openly released latent diffusion models for text-to-image generation, with recent versions from Stability AI. The AI Reddit Recap compared outputs from different Stable Diffusion models.

Q: What are some partnerships discussed between OpenAI and NVIDIA in the AI Twitter Recap?

A: The AI Twitter Recap discussed partnerships between OpenAI and NVIDIA, highlighting collaboration efforts between these two prominent organizations in the AI field.

Q: What are some updates about Llama 3 and Phi 3 models in the AI Discord Recap?

A: The AI Discord Recap mentioned updates about Llama 3 and Phi 3 models, showcasing advancements and releases in these AI models within the community.

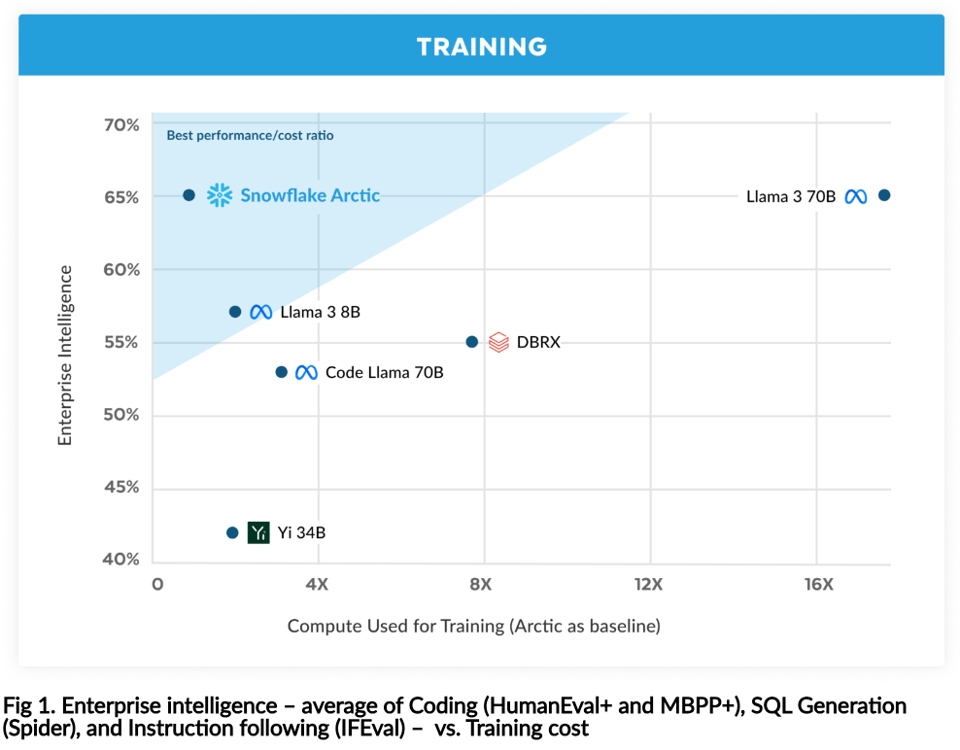

Q: What is the Snowflake Arctic model mentioned in the AI Twitter Recap?

A: Snowflake Arctic is a fully open LLM released by Snowflake. It uses a dense-MoE hybrid architecture that combines a 10B dense model with 128 experts of roughly 4B parameters each, and its release was discussed in the AI Twitter Recap.

Q: What were the discussions in the LAION Discord community focused on?

A: Conversations in the LAION Discord community revolved around AI models and potential privacy concerns related to cloud services.

Q: What were some topics explored in the LangChain AI Discord community?

A: Users in the LangChain AI Discord delved into topics like chatbot enhancements, sharing AI projects, and AI model training tutorials.

Q: What were some hardware-related discussions on the LM Studio Discord channels?

A: Discussions on the LM Studio Discord channels covered topics such as GPU offload issues, GPU acceleration challenges, and troubleshooting solutions for loading errors with specific models.

Q: What is LLaMA Pro's post-pretraining method mentioned in this summary?

A: LLaMA Pro's post-pretraining method aims to add knowledge to a model without catastrophic forgetting. The same discussion also covered techniques like QDoRA+FSDP and comparisons with a 141B Mistral model in the context of scaling.

Q: What is NVIDIA Nsight Compute used for in the context of CUDA applications?

A: NVIDIA Nsight Compute is utilized for in-depth analysis of CUDA applications, helping users uncover inefficiencies in GPU usage and gain insights into kernel performance issues.

Q: What were some discussions highlighted on the Eleuther Discord server?

A: Discussions on the Eleuther Discord server covered topics like regression analysis, sampling methodologies, model performance comparisons, and issues with specific models like Mixtral 8x7b.

Q: What topics were explored in the HuggingFace community discussions?

A: HuggingFace community discussions touched on topics such as batching, fine-tuning models, new model releases, setting up Python environments, and limitations of zero-shot classification.

Q: What was the focus of the discussions on AI's understanding capabilities?

A: The discussions focused on whether AI models can truly understand, highlighting the unique confluence of syntax and semantics in logic that can enable models to perform operations over meaning.

Q: What were the key points discussed about AI in Language and Society?

A: The discussions addressed the relationship between language and AI, highlighting language evolution, its lossy nature, and the impact on expressing complete ideas.

Q: What was the buzz about Apple's OpenELM?

A: Apple's OpenELM, an efficient, open-source language model family, generated excitement and speculation about its implications for the broader trend of open-source AI development.

Q: What technology integration was a topic of interest in the AI discussions?

A: The integration of AI and communication technology, including voice-to-text software and custom wake words for home voice assistants, was discussed.

Q: What was the AI-powered text RPG shared on the Playlab.AI platform called?

A: The AI-powered text RPG shared on Playlab.AI was named 'Brewed Rebellion,' where players navigate workplace politics involved in unionizing as a barista at StarBeans.
